Update 280

Hehe wow - when you've paved the road with robust solutions it's amazing how quickly everything comes together in the end. Now I have the path finding and creature abstraction done, it seems! It isn't throwing errors at me any more! And way too soon, because I haven't had any time to think about which item to move on to.

What I should do is adapt this whole path finding/abstract space system to accommodate creature dens as well, so that creatures will be able to go hibernate and the like. But frankly I'm a bit bored by this stuff now, and the dens can wait a little while.

The next thing that seems reasonable to get to is some kind of generic AI system. That would be the very basics of an AI, the core that's shared between all creatures. One thing I know this system should incorporate is the "brain ghost" system, where creatures upon seeing other creatures will create a symbol for that creature that can move around with slight autonomy even after the creature is no longer seen.

This is the core mechanic that makes it possible to trick the enemies in Rain World. If a lizard sees you, it will create a ghost of you, and as long as you're in its field of vision that ghost will always be fixed at your position. But the moment you get behind a corner, the ghost will start to move based on the lizard's assumptions of how you'd move (based on things such as movement direction at the last sighting), and this is your chance. The lizard AI is only ever able to ask for the ghost's position, never the actual player's. This means that if you diverge from the path the lizard assumes you'd take, you have a chance to trick it.
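To make the ghost idea concrete, here is a minimal sketch in Python (for illustration only, not the game's actual code). The `Ghost` class, its fields and the straight-line extrapolation are all assumptions of mine; the real guess about how the player moves could be much smarter.

```python
from dataclasses import dataclass


@dataclass
class Ghost:
    """One creature's private belief about where another creature is (hypothetical)."""
    x: float
    y: float
    vx: float = 0.0  # velocity at the last sighting, in tiles per tick
    vy: float = 0.0
    ticks_since_seen: int = 0

    def update(self, visible, real_pos=None, real_vel=None):
        if visible:
            # While the target is in view, the ghost is pinned to the real position.
            self.x, self.y = real_pos
            self.vx, self.vy = real_vel
            self.ticks_since_seen = 0
        else:
            # Out of view: move on assumptions only (here, plain straight-line motion).
            self.x += self.vx
            self.y += self.vy
            self.ticks_since_seen += 1

    @property
    def position(self):
        # The AI only ever reads this, never the real creature's position.
        return (self.x, self.y)


ghost = Ghost(x=0.0, y=0.0)
ghost.update(visible=True, real_pos=(5.0, 2.0), real_vel=(1.0, 0.0))
# The player ducks behind a corner and doubles back; the lizard never learns this.
for _ in range(3):
    ghost.update(visible=False)
print(ghost.position)  # (8.0, 2.0)
```

The lizard would now head for tile (8, 2) while the player could be somewhere else entirely, which is exactly the window for tricking it.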

This system is going to be common for all creatures, so that's something I could get at right away. The problem is that I have a slightly more complex environment this time compared to the Lingo build - for example it's not possible to know in advance how many creatures are going to be in the room and needing ghosts this time around, as creatures are allowed to move freely. Nothing that can't be helped by an hour or two in the thinking chair.
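One possible way around the unknown creature count, sketched in Python under my own assumptions: keep the ghosts in a dictionary keyed by creature ID, so entries appear on first sighting. The `GhostTracker` name, the ID strings and the "forget stale ghosts" rule are all hypothetical.

```python
class GhostTracker:
    """Holds one ghost per creature seen so far; grows on demand (hypothetical)."""

    def __init__(self):
        self.ghosts = {}  # creature id -> {"pos": (x, y), "last_seen": tick}

    def observe(self, creature_id, pos, tick):
        # The first sighting creates the entry; later sightings refresh it.
        self.ghosts[creature_id] = {"pos": pos, "last_seen": tick}

    def forget_older_than(self, tick, max_age):
        # Assumed housekeeping: drop ghosts too stale to be worth acting on.
        self.ghosts = {
            cid: g for cid, g in self.ghosts.items()
            if tick - g["last_seen"] <= max_age
        }


tracker = GhostTracker()
tracker.observe("slugcat_1", (3, 4), tick=0)
tracker.observe("lizard_7", (10, 4), tick=50)
tracker.forget_older_than(tick=100, max_age=60)
print(sorted(tracker.ghosts))  # ['lizard_7']
```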

All of this is just "data collection" though, the system that will provide the AI with the information on which it'll base its decisions. The decision making process itself is an entirely different matter.

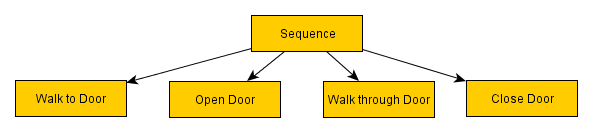

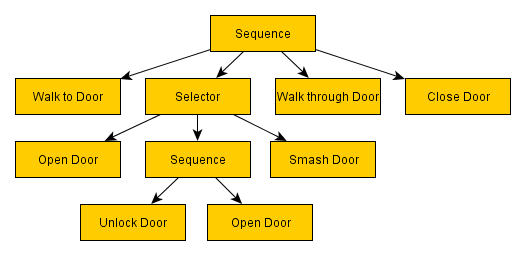

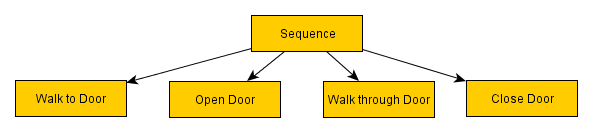

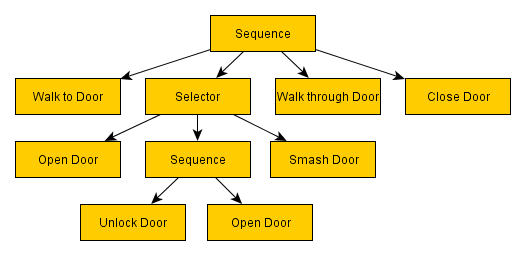

A Tree solution was posted a page or two back, and I really liked it. But I've also been thinking about a Pushdown Automata Finite State Machine kind of solution, where different modes are defined as behavior modules that can be stacked on top of each other. This would basically be a list of tasks, where the topmost item would be handled until it reached a success or failure, in which case the next one would take over. The difference from an ordinary to-do list is that the modules would be self-sufficient classes actually executing the behavior themselves, and also saving their state in case they got postponed, allowing them to pick up where they left off.
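A rough sketch of the stacked-modules idea in Python, just to pin down what I mean. `Behavior`, `BehaviorStack` and the step counter standing in for real behavior are made-up names and simplifications, not a finished design.

```python
class Behavior:
    """Self-contained module: runs its own logic and keeps its own state."""

    def __init__(self, name, steps):
        self.name = name
        self.steps_left = steps  # saved state survives being postponed

    def tick(self):
        # A fuller version would also be able to return "failure".
        self.steps_left -= 1
        return "success" if self.steps_left <= 0 else "running"


class BehaviorStack:
    def __init__(self):
        self.stack = []

    def push(self, behavior):
        self.stack.append(behavior)

    def tick(self):
        """Run only the topmost module; pop it once it finishes."""
        if not self.stack:
            return None
        top = self.stack[-1]
        if top.tick() != "running":
            self.stack.pop()  # the module underneath takes over next tick
        return top.name


brain = BehaviorStack()
brain.push(Behavior("wander", steps=3))
log = [brain.tick()]                     # wander runs one step
brain.push(Behavior("chase", steps=2))   # interruption: chase goes on top
log += [brain.tick() for _ in range(4)]
print(log)  # ['wander', 'chase', 'chase', 'wander', 'wander']
```

Note how "wander" resumes with two steps left after "chase" finishes, rather than starting over.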

In a way, you could say that the Pushdown Automata is a subsystem of the Tree, as it could be created within the tree if you used a Sequence node and allowed it to dynamically change its "playlist" during play...

While I'm rambling, one thing that I don't quite like with the Tree is that it has the AI character actively try every solution before moving on to the next one. If there's a lot of walking involved, this could mean that the character could spend several minutes executing some chain of actions where it's very obvious that the last one won't carry through.

(Images nicked from here)

Say that the "open door" node will return a fail. The NPC will still have walked to the door, which might not be a big problem in a small world with fast-paced gameplay. But in a large world Frodo and Sam might set out on their epic pilgrimage for three movies just to arrive at Mt. Doom and face the fact that they left their mountain climbing shoes back home, and that's pretty stupid. Potentially you could circumvent this problem by first running through the tree with an "assumption" cursor, which will make an educated guess on whether or not each action in the sequence will succeed, and only do the actual run-through if that's the case. This sort of defeats the purpose of the architecture though ...
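The "assumption" cursor could look something like this in Python. It's only a sketch: the `Action` class, its `estimate`/`execute` methods and the hard-coded guesses are hypothetical, and a real tree would have more node types than a single sequence.

```python
class Action:
    def __init__(self, name, likely_ok, actually_ok=True):
        self.name = name
        self.likely_ok = likely_ok      # cheap educated guess, no side effects
        self.actually_ok = actually_ok  # what really happens when executed

    def estimate(self):
        return self.likely_ok

    def execute(self, log):
        log.append(self.name)  # stands in for the NPC actually doing it
        return self.actually_ok


def run_sequence(actions, log):
    # Assumption pass: give up before doing anything if any step looks doomed.
    if not all(a.estimate() for a in actions):
        return False
    # Real pass: all() stops at the first failing action, like a Sequence node.
    return all(a.execute(log) for a in actions)


log = []
ok = run_sequence(
    [
        Action("walk to door", likely_ok=True),
        Action("open door", likely_ok=False),
        Action("walk through door", likely_ok=True),
    ],
    log,
)
print(ok, log)  # False []
```

The empty log is the point: the NPC never walked to the door it couldn't open.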

Another thing to take into consideration is that the tasks the RW AI will handle look very little like this:

Rain World contains extremely few puzzle-like situations where items are needed to traverse obstacles and the like. This layout is perfect for complex puzzles where several interactions are each unlocking the other until a final goal is reached.

The problems in Rain World are much more... fluid than this. Each creature is pretty much always free to move to wherever it wants without having to collect any keys or hit any switches. The only things that can restrain movement are being speared to a wall or held by another creature, neither of which there is really anything to do about except wiggle and squirm.

The Tree solution seems ideal for overcoming geographical obstacles in order to obtain items that can help overcome further geographical obstacles. RW has very little of either of these elements, and for NPCs, almost none.

So what does a Rain World creature need to think about? It needs to weigh many options, none of which are simply "possible" or "impossible". It needs to do this with limited information as well, being able to account for uncertainty. I imagine a typical Rain World lizard problem as something like this:

"I currently see no slugcats. I have seen two on this level though (Ghosts are still in memory). One I saw 240 ticks ago in a position 30 tiles removed from me. The other one I saw 20 ticks ago just 10 tiles away, but it was holding a spear. Which one do I go after?"

Another might be:

"Room A has three edible creatures in it, but also a creature that considers me edible. Room B has just one edible creature in it, but my own skin would be safe. Which one do I go in?"

My immediate idea is to somehow construct "plans" for what to do, and for each plan calculate a "good idea" value based on the known information.

Plan: Follow slugcat A! [Slugcat deliciousness: +40pts] [Distance to target: 20 tiles -> -20pts] [Time since I saw this slugcat: Currently looking at it! -> +30pts] [Time till rain: Starting to feel a little uneasy about the rain -> -10pts] Total points: 40pts

Plan: Follow slugcat B! [Slugcat deliciousness: +40pts] [Distance to target: 10 tiles -> -10pts] [Time since I saw this slugcat: 100 ticks -> -10pts] [Time till rain: Starting to feel a little uneasy about the rain -> -10pts] Total points: 10pts

Plan: Go home to den! [Time till rain: Starting to feel a little uneasy about the rain -> +10pts] Total points: 10pts

Decision: Follow slugcat A!
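The evaluation above is simple enough to write down directly. A minimal Python sketch, using the same point values as the three plans; the dictionary layout and the `score` helper are just one assumed way of organizing it.

```python
# Each plan is a set of named modifiers; the point values are the ones listed above.
plans = {
    "Follow slugcat A": {
        "deliciousness": 40,
        "distance (20 tiles)": -20,
        "currently looking at it": 30,
        "uneasy about rain": -10,
    },
    "Follow slugcat B": {
        "deliciousness": 40,
        "distance (10 tiles)": -10,
        "seen 100 ticks ago": -10,
        "uneasy about rain": -10,
    },
    "Go home to den": {
        "uneasy about rain": 10,
    },
}


def score(modifiers):
    """The "good idea" value of a plan is the sum of its modifiers."""
    return sum(modifiers.values())


totals = {name: score(mods) for name, mods in plans.items()}
decision = max(totals, key=totals.get)
print(totals)
print("Decision:", decision)  # Decision: Follow slugcat A
```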

As the rain got closer, the "go home" option would appear more and more attractive until it became the winner of the evaluation. The main problem I can see with a system like this is that it could lead to flickering back and forth between behaviors without actually carrying any of them through. This could perhaps be circumvented by having the evaluation be delayed for a little while after a decision has been made, but that in turn would make the creature look slow to react in some situations. Maybe the evaluation could be tied to some event...
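Here's a small Python sketch of the "delay the evaluation after a decision" idea, to show both the flickering and the cost of suppressing it. The `Decider` class, the cooldown length and the alternating scores are all invented for the example.

```python
class Decider:
    """Re-evaluates plans only once a cooldown has run out after a switch."""

    def __init__(self, cooldown):
        self.cooldown = cooldown
        self.current = None
        self.ticks_until_review = 0

    def tick(self, scores):
        if self.current is None or self.ticks_until_review <= 0:
            best = max(scores, key=scores.get)
            if best != self.current:
                self.current = best
                self.ticks_until_review = self.cooldown
        else:
            # Still committed to the last decision; scores are ignored this tick.
            self.ticks_until_review -= 1
        return self.current


# Two plans whose scores keep leapfrogging each other by one point.
frames = [
    {"chase": 10, "den": 9},
    {"chase": 9, "den": 10},
    {"chase": 10, "den": 9},
    {"chase": 9, "den": 10},
    {"chase": 10, "den": 9},
]

flicker = Decider(cooldown=0)
steady = Decider(cooldown=2)
print([flicker.tick(f) for f in frames])  # ['chase', 'den', 'chase', 'den', 'chase']
history = [steady.tick(f) for f in frames]
print(history)  # ['chase', 'chase', 'chase', 'den', 'den']
```

With the cooldown the creature commits for a few ticks, but it also ignores a better option during that time, which is the "slow to react" downside.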

What do you think? If you guys want to give me some reading on AI that would be much appreciated! (Looking at you, Gimym JIMBERT)
