Having watched the video, I think your most jarring transitions are the ones where the two screens have some overlap, for example the 0:10 transition and especially the 0:43 transition. The most comfortable are the ones where the character goes to the full edge of the screen and then teleports to the opposite edge of a completely new screen, for example at 0:30. This is the traditional "flip screen" behavior that many games have had and that gamers are fairly familiar with.

Probably what makes the first type bad is that it is unexpected. With the second type, as I approach the edge of the screen as a player I know something has got to happen, since before long I will be off the screen entirely. The player can anticipate the event. And the player knows where to look to find their avatar on the new screen, since there is a consistent behavior.

Watching the tower ascent starting at 1:00, it actually works pretty well. The rule of predictability is maintained, and the small overlap between the screens (usually it's a horizontal bar that is visible on both) seems to work well. (Though, the small camera movement right before each camera switch seems unnecessary and weird.)

However, in my own game I started with flip screen and later added a sliding transition, similar to what one sees in Zelda. I feel this is actually superior, since the player can watch their character the whole time. There is also a short period during the transition where player input is disabled, which lets players let go of the controls, or get ready to start moving, after they have already seen the new room briefly.
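A rough sketch of that kind of sliding transition, in Python for brevity. Everything here is hypothetical: the names `SlideTransition` and `read_input`, the linear interpolation, and the 0.5 second duration are illustrative assumptions, not how any particular game does it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SlideTransition:
    start_x: float        # camera x when the slide begins
    end_x: float          # camera x once the new room fills the screen
    duration: float       # seconds the slide takes (assumed value)
    elapsed: float = 0.0

    @property
    def active(self) -> bool:
        return self.elapsed < self.duration

    def update(self, dt: float) -> float:
        """Advance the slide by dt seconds and return the camera x."""
        self.elapsed = min(self.elapsed + dt, self.duration)
        t = self.elapsed / self.duration  # 0.0 at the start, 1.0 at the end
        return self.start_x + (self.end_x - self.start_x) * t


def read_input(raw_input: float, transition: Optional[SlideTransition]) -> float:
    """Ignore player input while a slide is in progress."""
    if transition is not None and transition.active:
        return 0.0
    return raw_input


slide = SlideTransition(start_x=0.0, end_x=1400.0, duration=0.5)
print(read_input(1.0, slide))  # 0.0 while the camera is still sliding
print(slide.update(0.25))      # 700.0, halfway to the new room
```

Once `update` has consumed the full duration, `active` turns false and `read_input` passes the player's input through again.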

Your choice on the last point though, as many good games have used flip screen and players can easily cope with it, as long as it is done in a consistent way.

Very good points all of these, and they generally line up with my experience as I've been trying these systems out. I think the best would be to have it switch when you reach the edge of the screen horizontally, because then you expect it to switch. The main problem here is that we're supposed to support both wide-screen and non-widescreen formats...

4000 (4096 in fact) isn't a Unity limitation; it's the physical limit of the GPU's shaders, and even that might not work on all cards, since some don't accept such large textures. You should look into things like virtual texture techniques to learn how to handle arbitrarily large textures (Amplify uses something like that).

There is certainly a way to do it, since there are many Unity 2D games that handle very large levels. Maybe rendering everything in a single shader is not the best solution? Try posting about it on the Unity3D forums!

http://forum.unity3d.com

Start a thread in Showcase too; maybe Unity themselves will offer to help, it happens.

I was having a problem with an extremely large texture myself once. I was using a big sprite sheet (2080*1280) with 160px*160px pre-rendered 3d sprites, and I bound the whole thing to a single texture using OpenGL, but it wouldn't work on older computers. So yeah, it's a GPU thing. The solution was to cut it up into individual textures after loading. Perhaps you should do something similar? 512x512 chunks drawn in a grid?
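As a rough sketch of that chunking idea, here is how the grid of sub-rectangles could be computed. The function name `chunk_rects` is made up for illustration, and the actual texture upload calls are left out since they depend on the engine or graphics API.

```python
def chunk_rects(width, height, chunk=512):
    """Return (x, y, w, h) rectangles that tile a width x height image."""
    rects = []
    for y in range(0, height, chunk):
        for x in range(0, width, chunk):
            # Chunks on the right and bottom edges are clipped to the image.
            rects.append((x, y, min(chunk, width - x), min(chunk, height - y)))
    return rects


rects = chunk_rects(2080, 1280)
print(len(rects))  # 15 chunks: 5 columns by 3 rows
print(rects[-1])   # (2048, 1024, 32, 256)
```

Note that 512 is not a multiple of 160, so 512px chunks would split some of the 160px sprites across chunk borders. A chunk size such as 480 or 640 would keep each sprite inside a single chunk.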

Yeah, I could just cut it up into smaller chunks. That wouldn't help with the enormous file size and RAM usage though. Plus the dynamic shadow issue, etc. The situation isn't really that I can't make it work (you've seen the gif, I did make it work already) as much as that I don't think it will be worth it. On the one side we have a cool transition; on the other we have a huge workload on the processor, on the GPU, on me, a huge increase in file size and RAM usage, a terrible logistical situation with 30 files per level (or more, if I cut them up), the possibility that the already implemented dynamic shadows would have to go, etc.

The dynamic shadows are visible all the time - the smooth camera movement would only be visible during very short intervals. It would still chew framerate all the time, though.

That said, 30 layers? H-How? Do you have a single background element, like a chain, on each layer? If so couldn't you save such things as a sprite and set their position and depth through a map script on load? Or is it more like 10*(texture map+depth map+normal map)?

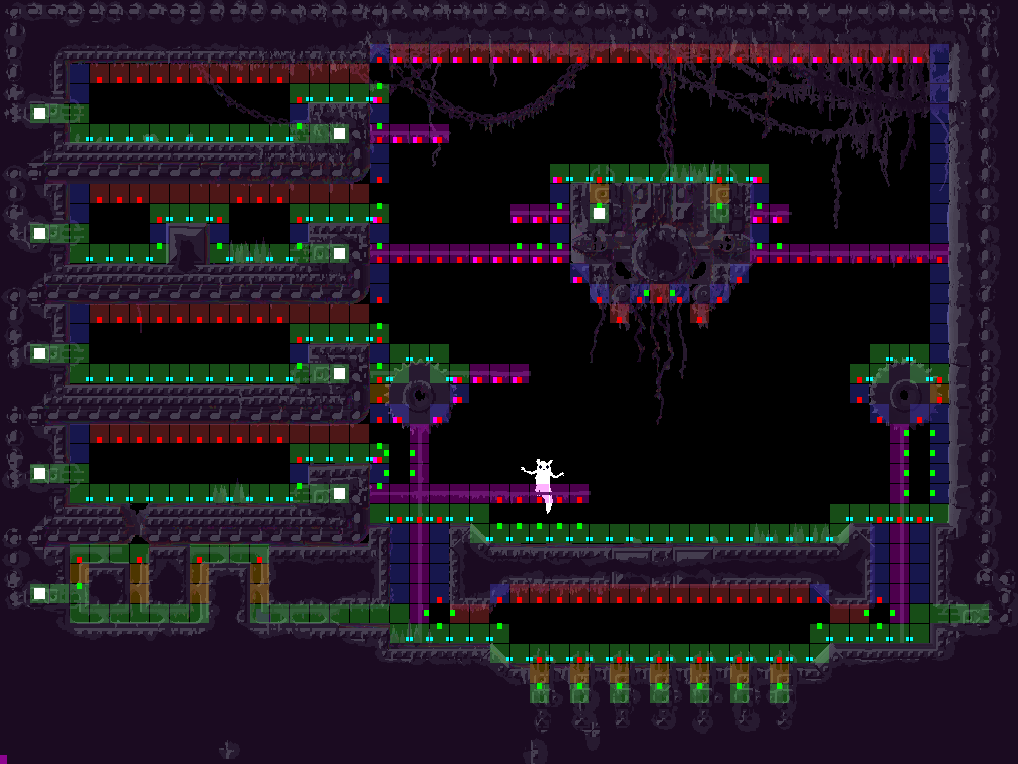

Think of it more like a voxel matrix, or a 3D texture, and it makes more sense. Then each screen would be 1400*800*30 pixels. I guess that to most people the game looks very 2D, but if you look closely at some screenshots you'll notice that on the left side, you can see the inside of the wall, and on the right side too. That's the perspective in action. So the game is slightly 2.5D, perhaps.
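To put rough numbers on that, here is a back-of-the-envelope sketch. The 4 bytes per pixel figure is an assumption (uncompressed 8-bit RGBA); the actual storage format isn't stated here, so real memory use could differ a lot.

```python
SCREEN_W, SCREEN_H, LAYERS = 1400, 800, 30
BYTES_PER_PIXEL = 4  # assumption: uncompressed 8-bit RGBA


def screen_pixels():
    """Pixels in one screen when every layer is stored in full."""
    return SCREEN_W * SCREEN_H * LAYERS


def screen_bytes(screens=1):
    """Raw bytes for the given number of screens at the assumed format."""
    return screen_pixels() * BYTES_PER_PIXEL * screens


print(screen_pixels())                      # 33600000
print(f"{screen_bytes() / 2**20:.1f} MiB")  # 128.2 MiB
```

Under that assumption, each extra screen kept in memory at once costs roughly another 128 MiB.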

If I were to make everything separate sprites, the main problem wouldn't be chains and plants etc. (though even those alone wouldn't really amount to 30, they'd rather be in the hundreds) but the fact that a standard tile is 10 sprites on top of each other. A standard level will have something like 7000 tiles in it, so that'd be 70 000 sprites, chains not counted.

Gah, you guys make me want to go back and try to solve this again! I'll talk to James though; maybe it will get revisited, but I should probably make some actual game mechanics before I dive too deep into the cosmetic stuff. In either case this latest big update has brought dynamic level sizes with it, and that will always be a necessary feature, no matter how we choose to display it.

A quick question to you programming people out there - is there a rule of thumb for which is best, saving data or calculating on the fly?

Currently I'm working with an AI map, a class that's attached to a level and which can be asked by AI entities for necessary information concerning specific tiles. Such information could be whether the tile is a floor (on top of a solid tile), or whether it has any special paths (don't know what to call them; a special path would be, for example, "if a creature drops down from this specific climbable tile, it will land on this specific floor tile"). In short, these queries will require asking neighboring tiles for their properties.

Now I have two options - either I save all this information to the tile map on loading the level, or I calculate it every time I'm asked for it.

I understand that this basically is a decision between burdening the processor or the RAM, but maybe the burdens would be drastically disproportionate in favor of one solution?
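The two options can be sketched side by side. This is only an illustration under assumptions: the `AIMap` class, its `precompute` flag, and the "empty tile directly above a solid tile" definition of a floor are made up here, and tiles outside the level are treated as empty.

```python
class AIMap:
    def __init__(self, solid, precompute=False):
        self.solid = solid            # solid[y][x], row 0 is the top row
        self.height = len(solid)
        self.width = len(solid[0])
        self._floor = None
        if precompute:
            # Option 1: pay the cost once at level load, store one bool per tile.
            self._floor = [
                [self._compute_is_floor(x, y) for x in range(self.width)]
                for y in range(self.height)
            ]

    def _is_solid(self, x, y):
        if 0 <= x < self.width and 0 <= y < self.height:
            return self.solid[y][x]
        return False  # assumption: everything outside the level is empty

    def _compute_is_floor(self, x, y):
        # A floor is an empty tile with a solid tile directly below it.
        return not self._is_solid(x, y) and self._is_solid(x, y + 1)

    def is_floor(self, x, y):
        if self._floor is not None:
            return self._floor[y][x]          # stored answer
        return self._compute_is_floor(x, y)   # Option 2: compute per query


level = [
    [False, False, False],
    [False, False, True],
    [True,  True,  True],
]
print(AIMap(level).is_floor(0, 1))                   # True
print(AIMap(level, precompute=True).is_floor(1, 0))  # False
```

Because both variants sit behind the same `is_floor` call, the AI code doesn't need to know which one is in use, so it's possible to start with on-the-fly queries and switch to stored results only for the queries that turn out to be expensive.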
